Talk: Andrej Karpathy: Software Is Changing (Again)

Andrej Karpathy's keynote at AI Startup School in San Francisco about how LLMs are introducing a 3rd generation of software

Overview

Andrej Karpathy is a machine learning research scientist, a founding member of OpenAI, and former Senior Director of AI at Tesla.

Earlier this year he gave a fascinating keynote at Y Combinator's "AI Startup School" in San Francisco, in which he laid out the concept of "Software 3.0".

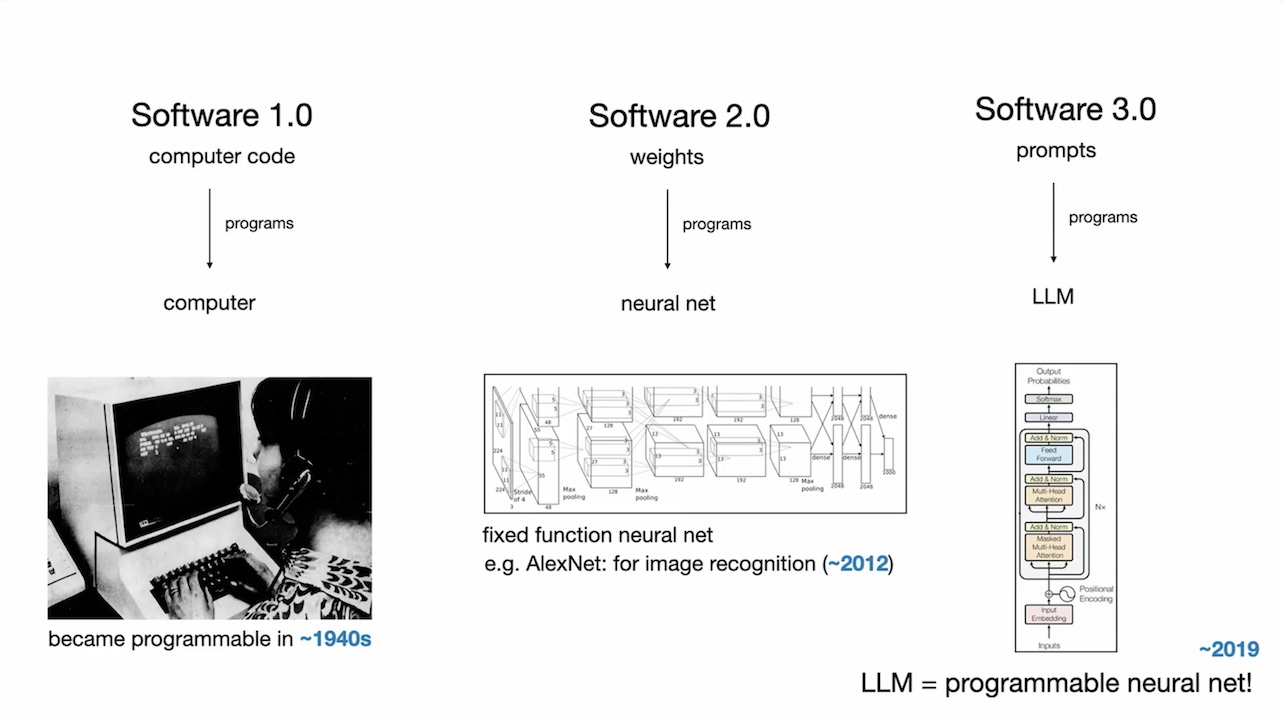

The idea: Software 1.0 is ordinary code written by programmers.

Software 2.0 is machine learning models, whose weights are learned from data rather than written by hand; they have been deployed en masse over the last few years.

Software 3.0 is LLMs, programmed with prompts: an entirely new generation of software.

Talk Chapters

0:00 Imo fair to say that software is changing quite fundamentally again. LLMs are a new kind of computer, and you program them in English. Hence I think they are well deserving of a major version upgrade in terms of software.

6:06 LLMs have properties of utilities, of fabs, and of operating systems => New LLM OS, fabbed by labs, and distributed like utilities (for now). Many historical analogies apply - imo we are computing circa ~1960s.

14:39 LLM psychology: LLMs = "people spirits", stochastic simulations of people, where the simulator is an autoregressive Transformer. Since they are trained on human data, they have a kind of emergent psychology, and are simultaneously superhuman in some ways, but also fallible in many others. Given this, how do we productively work with them hand in hand?

Switching gears to opportunities...

18:16 LLMs are "people spirits" => can build partially autonomous products.

29:05 LLMs are programmed in English => make software highly accessible! (yes, vibe coding)

33:36 LLMs are new primary consumer/manipulator of digital information (adding to GUIs/humans and APIs/programs) => Build for agents!

Talk Summary

Overall Talk Topic

Andrej Karpathy presents a case that software is undergoing a foundational transformation. Large Language Models (LLMs) represent a new kind of computer—programmable in natural language. This shift is significant enough to justify calling it a major new version of software: “Software 3.0.” The talk explores the architecture of LLMs, their emergent psychology, the nature of current opportunities, and the best strategies for building with and for them.

Section 1: Software is Fundamentally Changing (0:49)

LLMs are not just models; they are a new type of computer. They are programmable in English and offer capabilities that differ fundamentally from traditional imperative or neural-network-based software. Given this new paradigm, Karpathy argues it is appropriate to bump the major version of software. Software 1.0 is explicit code written by programmers, Software 2.0 is neural networks whose weights are learned from data, and Software 3.0 is LLMs programmed in natural language through prompts.
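To make the three versions concrete, here is an illustrative sketch (not code from the talk) of one task, sentiment classification, written three ways in Python. The word lists, the training data, and the `llm` callable are hypothetical. The Software 2.0 part uses a tiny bag-of-words perceptron, chosen only because it fits in a few lines. The Software 3.0 part only builds the prompt and hands it to whatever model function you pass in, since calling a real model depends on a provider API that is not assumed here.

```python
from typing import Callable

# Software 1.0: a human writes the rules explicitly (hypothetical word lists).
POSITIVE = {"great", "love", "excellent"}
NEGATIVE = {"bad", "hate", "awful"}

def sentiment_v1(text: str) -> str:
    words = text.lower().split()
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    return "positive" if score >= 0 else "negative"

# Software 2.0: the "program" is a set of weights learned from labeled examples.
# A minimal perceptron over word counts (no bias term), used only for brevity.
def train_v2(examples: list[tuple[str, str]], epochs: int = 10) -> dict[str, float]:
    weights: dict[str, float] = {}
    for _ in range(epochs):
        for text, label in examples:
            target = 1 if label == "positive" else -1
            words = text.lower().split()
            activation = sum(weights.get(w, 0.0) for w in words)
            if target * activation <= 0:  # wrong side of (or on) the boundary: update
                for w in words:
                    weights[w] = weights.get(w, 0.0) + target
    return weights

def sentiment_v2(weights: dict[str, float], text: str) -> str:
    activation = sum(weights.get(w, 0.0) for w in text.lower().split())
    return "positive" if activation > 0 else "negative"

# Software 3.0: the program is an English (few-shot) prompt; an LLM is the "computer".
def build_prompt_v3(text: str, shots: list[tuple[str, str]]) -> str:
    parts = ["Classify the sentiment of each review as positive or negative."]
    for example, label in shots:
        parts.append(f"Review: {example}\nSentiment: {label}")
    parts.append(f"Review: {text}\nSentiment:")
    return "\n\n".join(parts)

def sentiment_v3(llm: Callable[[str], str], text: str,
                 shots: list[tuple[str, str]]) -> str:
    # `llm` is a hypothetical stand-in for any text-completion call.
    return llm(build_prompt_v3(text, shots)).strip().lower()
```

The contrast is in what you edit to change behavior: the rules in 1.0, the dataset (then retrain) in 2.0, and the English prompt in 3.0.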

Section 2: LLMs as Utilities, Fabs, and Operating Systems (6:06)

Karpathy describes LLMs as akin to utilities (like electricity), chip fabs (expensive to produce, cheap to use), and operating systems (platforms with APIs and abstractions). As in 1960s computing, the models are expensive and centralized, so most people access them through thin clients over the network. While LLMs are currently cloud-hosted utilities, a personal computing model may re-emerge as smaller models become locally deployable.

Section 3: LLM Psychology (14:39)

LLMs behave like "people spirits": simulations of human minds with a psychology shaped by their training data. They possess strengths like encyclopedic recall and creative problem-solving, but also flaws such as hallucinations and inconsistent ("jagged") performance. They also lack persistent memory: nothing carries over between sessions unless it is put back into the context window. Effective collaboration with LLMs requires tools that accommodate their abilities while mitigating their weaknesses, especially tight generate/verify cycles and human oversight.

Section 4: Opportunities: Partially Autonomous Products (18:16)

Because LLMs simulate people, they can drive new forms of human-computer interaction. Products like Cursor and Perplexity wrap LLMs in interfaces that provide automated context, diff-based UIs for human review, and autonomy sliders that balance between user control and model initiative. The most successful applications will support a human-in-the-loop dynamic, allowing LLMs to act semi-independently within guardrails.
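As a rough illustration of the generate/verify loop with diff-based review and an autonomy slider, here is a minimal Python sketch. The `Autonomy` levels, `review_loop`, and the `propose_edit` and `approve` callbacks are hypothetical names, not how Cursor or any other product is implemented; the model is replaced by a plain function so the loop runs offline. It uses the standard library's `difflib.unified_diff` to produce the diff a human would review.

```python
import difflib
from enum import IntEnum
from typing import Callable

class Autonomy(IntEnum):
    # Hypothetical slider positions, from most human control to most model initiative.
    SUGGEST = 0     # show the diff only; never apply it
    ASK = 1         # apply only if the human approves the diff
    AUTO_APPLY = 2  # apply without asking; the human reviews afterwards

def review_loop(
    original: str,
    propose_edit: Callable[[str], str],  # stand-in for an LLM call (generate)
    approve: Callable[[str], bool],      # stand-in for a human accept/reject (verify)
    autonomy: Autonomy,
) -> tuple[str, str]:
    """Generate one edit, render it as a unified diff, and gate it on the slider.

    Returns (resulting_text, diff_shown_to_human).
    """
    proposed = propose_edit(original)
    diff = "".join(
        difflib.unified_diff(
            original.splitlines(keepends=True),
            proposed.splitlines(keepends=True),
            fromfile="before",
            tofile="after",
        )
    )
    if not diff:  # no change proposed, nothing to verify
        return original, diff
    if autonomy == Autonomy.SUGGEST:
        return original, diff
    if autonomy == Autonomy.ASK and not approve(diff):
        return original, diff
    return proposed, diff
```

Keeping each proposal small and showing it as a diff is what keeps the human "verify" step fast; moving the slider toward `AUTO_APPLY` trades review effort for speed.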

Section 5: English as Code – Natural Language Interfaces (29:05)

Programming LLMs in English democratizes access to software creation. Karpathy describes how "vibe coding" lets even non-programmers generate useful applications. However, the infrastructure around the code (e.g., payments, authentication) remains laborious to set up. Building an iOS app or web tool is now faster than ever, but operations are still the bottleneck, which points to where further automation or tooling improvements could help.

Section 6: Building for Agents (33:36)

LLMs are now a new primary consumer and manipulator of digital information, joining humans (via GUIs) and traditional programs (via APIs). Developers should design with agents in mind: offer documentation in Markdown, publish machine-consumable summaries (like llms.txt), and avoid GUI-only instructions such as "click here" that an agent cannot follow. Early examples like Gitingest and DeepWiki show how small design tweaks can expose functionality to agents and unlock powerful behaviors.
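For the llms.txt idea, here is a minimal sketch: a Python helper that renders a Markdown file in the layout proposed at llmstxt.org (an H1 project name, a blockquote summary, then H2 sections containing lists of links with optional notes). The class names and the example project are hypothetical, and the proposal permits more structure than is shown here.

```python
from dataclasses import dataclass, field

@dataclass
class Link:
    title: str
    url: str
    note: str = ""  # optional one-line description for the agent

@dataclass
class LlmsTxt:
    name: str
    summary: str
    sections: dict[str, list[Link]] = field(default_factory=dict)

    def render(self) -> str:
        # H1 title and blockquote summary come first.
        out = [f"# {self.name}", "", f"> {self.summary}"]
        # Each section is an H2 heading followed by a Markdown list of links.
        for heading, links in self.sections.items():
            out += ["", f"## {heading}", ""]
            for link in links:
                suffix = f": {link.note}" if link.note else ""
                out.append(f"- [{link.title}]({link.url}){suffix}")
        return "\n".join(out) + "\n"
```

Served at a site's `/llms.txt` path, the rendered text gives an agent a compact, text-only map of the documentation without requiring it to navigate a GUI.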

Conclusion

LLMs mark the beginning of a new software paradigm. Though they are not perfect, they are powerful and increasingly central to both consumer and developer workflows. Over the next decade, software will shift toward partially autonomous, LLM-driven agents, and the developers who embrace Software 3.0’s strengths—while designing robust human-AI workflows—will define the next era of computing.

Notes

- Outages at leading LLM providers act like an “intelligence brownout,” instantly lowering global productivity.

- Unlike electricity or GPS, LLMs diffused consumer-first; enterprises and governments are still catching up.

- The ability to run small models on a Mac Mini hints at a coming personal-compute era once inference costs fall.

- Tesla’s experience shows entire swaths of C++ code can be deleted as neural nets, and soon prompts, absorb that functionality.

YouTube Description

Drawing on his work at Stanford, OpenAI, and Tesla, Andrej sees a shift underway. Software is changing, again. We’ve entered the era of “Software 3.0,” where natural language becomes the new programming interface and models do the rest.

He explores what this shift means for developers, users, and the design of software itself, arguing that we're not just using new tools but building a new kind of computer.

More content from Andrej: @andrejkarpathy

Chapters on YouTube

00:00 - Intro

01:25 - Software evolution: From 1.0 to 3.0

04:40 - Programming in English: Rise of Software 3.0

06:10 - LLMs as utilities, fabs, and operating systems

11:04 - The new LLM OS and historical computing analogies

14:39 - Psychology of LLMs: People spirits and cognitive quirks

18:22 - Designing LLM apps with partial autonomy

23:40 - The importance of human-AI collaboration loops

26:00 - Lessons from Tesla Autopilot & autonomy sliders

27:52 - The Iron Man analogy: Augmentation vs. agents

29:06 - Vibe Coding: Everyone is now a programmer

33:39 - Building for agents: Future-ready digital infrastructure

38:14 - Summary: We’re in the 1960s of LLMs — time to build

References

- Andrej Karpathy: Software Is Changing (Again) (YouTube)

- Andrej Karpathy: Software Is Changing (Again) (Y Combinator on X)

- First Andrej Karpathy tweet

- Second Andrej Karpathy tweet

- Slides (Keynote)

- Slides (PDF)

- Software 2.0 (blog post, 2017)

- How LLMs flip the script on technology diffusion

- Vibe coding MenuGen (retrospective)

- His YouTube channel